timvideos/getting-started

[LiteX+HDMI2USB] Improve NuttX on LiteX generated SoC & replace HDMI2USB bare metal firmware

Issue body

Brief explanation

The HDMI2USB project uses “bare metal” firmware running on a soft CPU inside the FPGA. We would like to replace this code with something running on a proper operating system to make it easier to add new features. NuttX is one such option for a lightweight OS.

Expected results

All current HDMI2USB based functionality now works with a firmware running NuttX rather than bare metal C code.

Detailed Explanation

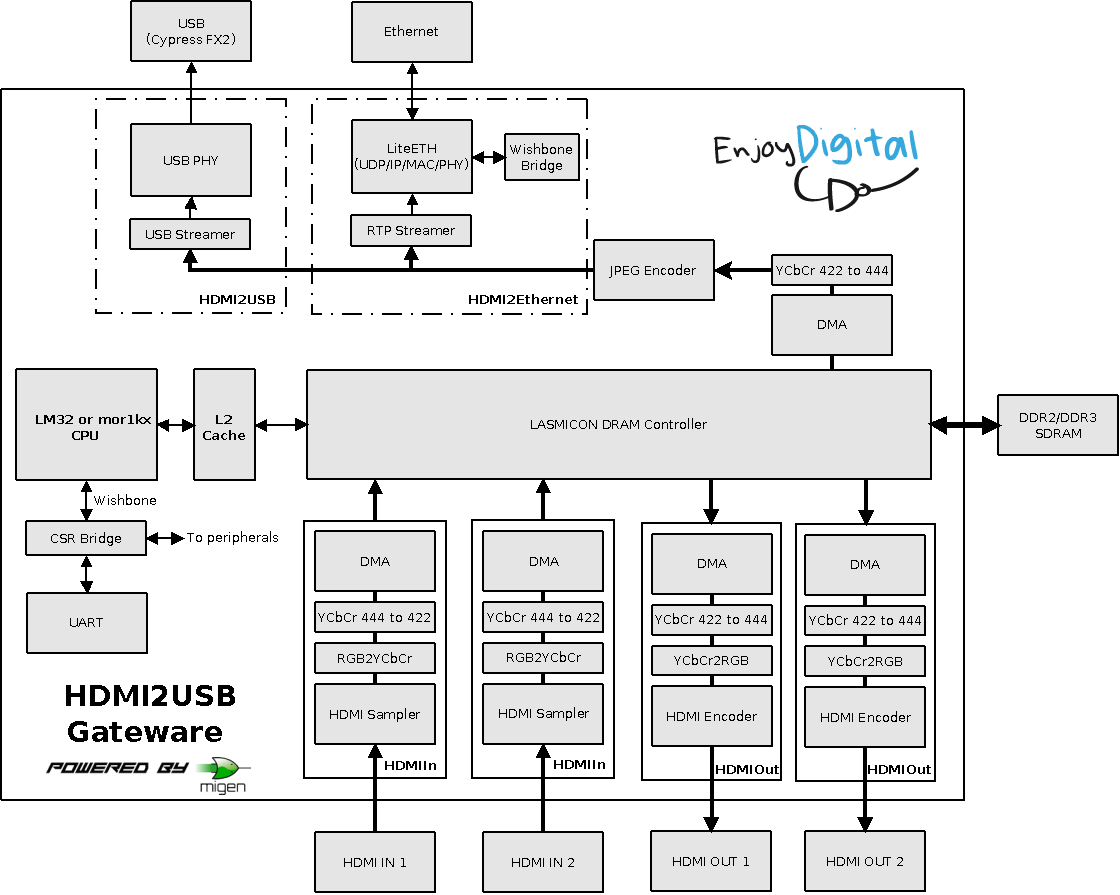

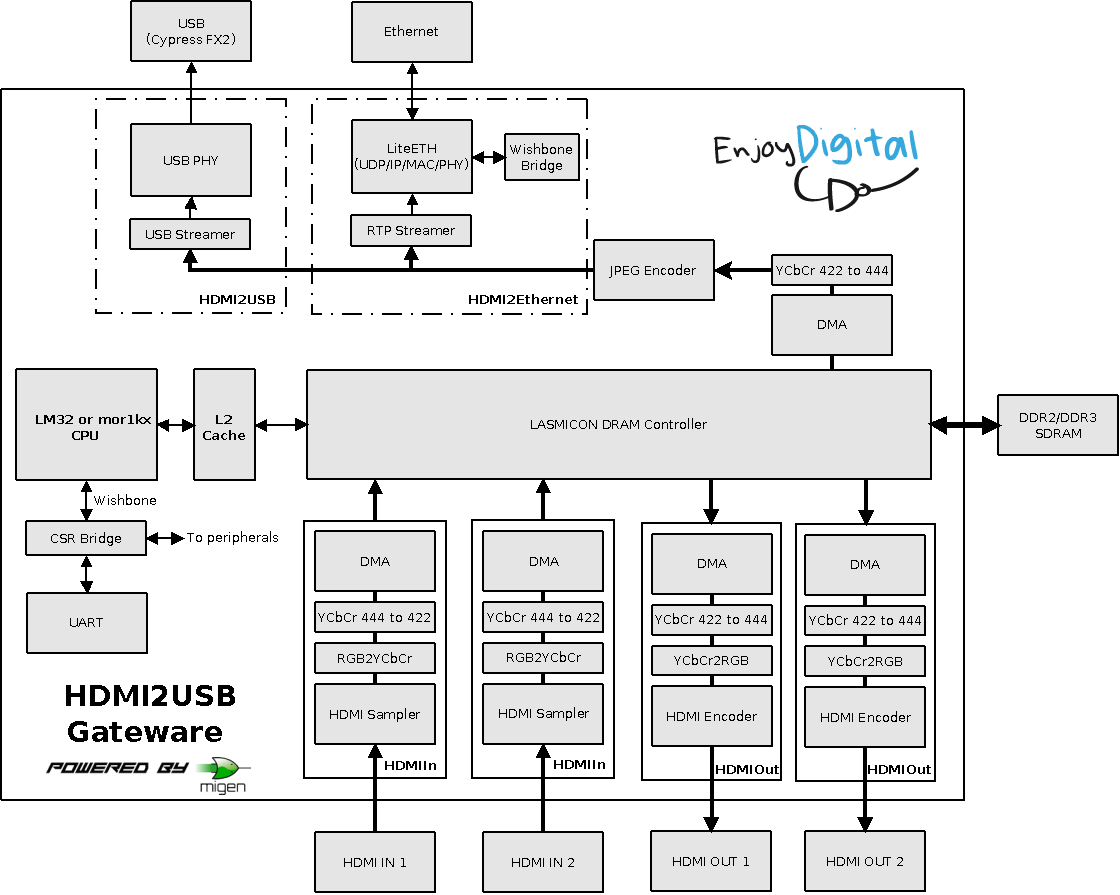

The HDMI2USB-misoc-firmware embeds an LM32 soft-core for controlling and configuring the hardware. See the diagrams above.

This soft-core should be able to run NuttX. NuttX is a real-time operating system (RTOS) with an emphasis on standards compliance and small footprint.

Some work has already been done on getting NuttX to run on LiteX.

Further reading

- http://www.nuttx.org/Documentation/NuttX.html#misoclm32

- http://nuttx.yahoogroups.narkive.com/uAcgVEfZ/misoc-lm32-port

- [LiteX] QEmu simulation of a LiteX generated SoC

- [HDMI2USB] Port Linux to the lm32 CPU and support HDMI2USB firmware functionality


Contacts

- Potential Mentors: @shenki @mithro @enjoy-digital

- Mailing list: [email protected]

Issue details

[LiteX] QEmu simulation of a LiteX generated SoC

Issue body

More technical details at Issue #86: Get lm32 firmware running under qemu to enable testing without hardware on HDMI2USB-misoc-firmware repo

Brief explanation

Firmware development currently requires access to FPGA hardware. With QEMU, developers should be able to develop and improve the firmware without hardware.

Expected results

Developers are able to write new features for the HDMI2USB firmware and develop MicroPython for FPGAs using QEMU.

Detailed Explanation

See the “LiteX QEmu Simulation support Random Notes” document.


Contacts

- Potential Mentors: @shenki

- Mailing list: [email protected]

Issue details

[LiteX] Improve support for project IceStorm (Lattice ICE40 + OpenFPGA toolchain)

Issue body

Brief explanation

IceStorm, the first fully FOSS toolchain for FPGA development, targets the Lattice ICE40 FPGA. LiteX has some support for this toolchain, but it has not been well tested.

Expected results

LiteX has good support for the IceStorm workflow and ICE40 based development boards. At least the LM32 and RISC-V soft CPUs, and many of the peripherals, should work.

Detailed Explanation

- TODO.

Further reading

- IceStorm Toolchain

- whitequark’s “Implementing a simple SoC in Migen”, which uses IceStorm.

- icoBoard - FPGA based IO board for RPi


Contacts

- Potential Mentors: @{{github mentor username}}

- Mailing list: [email protected]

Issue details

[LiteX] Create a generic debug interface for soft-CPU cores and connect to GDB

Issue body

Brief explanation

When developing software, being able to use GDB to find out what the CPU is doing is really useful. We would like that capability for LiteX’s multiple soft-CPU implementations, both on real hardware and in simulation.

Expected results

GDB is able to control the soft-CPU implementations both on real hardware and in simulation.

Detailed Explanation

The student will need to implement the parts shown in blue in the following diagram;
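On the GDB side, remote targets are normally driven over GDB's Remote Serial Protocol, so any bridge between GDB and a soft-CPU debug unit will probably need to frame and check packets. The sketch below shows only that framing step (`$payload#checksum`, where the checksum is the payload byte sum modulo 256 written as two hex digits). It is an assumption about one piece of the work rather than the project's design: the function names are hypothetical, and escaping of special characters inside the payload is left out.

```python
def rsp_checksum(payload: bytes) -> int:
    """Sum of all payload bytes, modulo 256."""
    return sum(payload) % 256


def rsp_frame(payload: bytes) -> bytes:
    """Wrap a payload as `$<payload>#<two hex digit checksum>`."""
    checksum = f"{rsp_checksum(payload):02x}".encode("ascii")
    return b"$" + payload + b"#" + checksum


def rsp_unframe(packet: bytes) -> bytes:
    """Return the payload of a framed packet, or raise ValueError."""
    if len(packet) < 4 or not packet.startswith(b"$") or packet[-3:-2] != b"#":
        raise ValueError("malformed packet")
    payload = packet[1:-3]
    try:
        received = int(packet[-2:], 16)
    except ValueError:
        raise ValueError("checksum is not hexadecimal") from None
    if received != rsp_checksum(payload):
        raise ValueError("checksum mismatch")
    return payload


if __name__ == "__main__":
    # "g" is the GDB request to read all general registers.
    print(rsp_frame(b"g"))
```

A stub built on this would answer each valid packet with `+` and then send its own framed reply; that handshake is not shown here.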


Contacts

- Potential Mentors: @{{github mentor username}}

- Mailing list: [email protected]

Issue details

[LiteX] Create a litescope based "Integrated Bit Error Ratio Tester" (iBERT) clone

Issue body

Brief explanation

Xilinx has a LogiCORE called iBERT for testing error rates on high speed channels. The task is to create a similar tool based on LiteX and LiteScope.

Expected results

Gateware can be generated for a given board with high speed transceivers and a GUI tool on the computer can be used to examine error rates over the transceivers using different settings.

Detailed Explanation

This project has three parts:

a) Data sequence generators + checkers. These generate a given bit data stream and then, after transmission and reception, check that the received bit data stream is correct.

b) Data channel wrappers. These give you a common interface for controlling the parameters of a channel used in transmission and reception. For simple data channels this might just provide clock control. For more advanced channels, like the high speed transceivers, this provides control over parameters such as pre-emphasis and equalisation.

c) Host computer control GUI / console. This gives a nice interface for controlling all the parameters and seeing the results of various tests. The Xilinx iBERT Console is an example of such an interface.

The student is expected to create all three parts of this tool, reusing LiteScope and LiteX for the FPGA<->Host communication and development. The work can be seen in the following diagram; the parts in blue need to be developed by the student.
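To make part (a) more concrete, here is a minimal software model of a sequence generator and checker. It assumes a PRBS-7 pattern (polynomial x^7 + x^6 + 1), which is a common choice for link testing but is not specified by this issue, and it assumes the checker starts in step with the generator; a real gateware checker would need to synchronise itself to the incoming stream. All names are hypothetical.

```python
from itertools import islice


def prbs7(seed=0x7F):
    """Yield the bits of a PRBS-7 sequence (x^7 + x^6 + 1), period 127."""
    state = seed & 0x7F
    if state == 0:
        raise ValueError("seed must be non-zero, or the LFSR never leaves zero")
    while True:
        new_bit = ((state >> 6) ^ (state >> 5)) & 1
        state = ((state << 1) | new_bit) & 0x7F
        yield new_bit


def count_bit_errors(received_bits, seed=0x7F):
    """Compare received bits against a locally regenerated reference."""
    reference = prbs7(seed)
    return sum(rx != ref for rx, ref in zip(received_bits, reference))


if __name__ == "__main__":
    sent = list(islice(prbs7(), 1000))
    received = list(sent)
    received[10] ^= 1   # simulate two bit errors on the channel
    received[500] ^= 1
    errors = count_bit_errors(received)
    print(f"errors={errors} BER={errors / len(received):.4f}")
```

The same generator on both ends is what lets the checker count errors without the host ever seeing the raw data, so only the error counters need to cross the FPGA<->Host link.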

Further reading

- LiteX

- LiteScope

- ChipScope Pro Tutorial: Using an IBERT Core with ChipScope Pro Analyzer

- Enjoy Digital Transceiver Test Repo

Knowledge Prerequisites

- This project requires access to FPGA hardware with high speed transceivers.

Contacts

- Potential Mentors: @mithro, @enjoy-digital

- Mailing list: [email protected]

Issue details

[LiteX] Add support for ZPU / ZPUino in MiSoC

Issue body

Brief explanation

MiSoC currently supports the lm32 and mor1k architectures. It would be nice if it also supported the ZPU soft core (and the ZPUino peripherals).

## Expected results

MiSoC is able to use a CPU core which supports the ZPUino architecture as an option when building SoC components.

# Detailed Explanation

## ZPU - The Zylin ZPU

ZPUino is a SoC (System-on-a-Chip) based on Zylin’s ZPU 32-bit processor core.

The ZPU is described as the world’s smallest 32-bit CPU with a GCC toolchain.

The ZPU is a small CPU in two ways: it takes up very few resources and the architecture itself is small. The latter can be important when learning about CPU architectures and implementing variations of the ZPU where aspects of CPU design are examined. In academia, students can learn VHDL and CPU architecture in general, and complete exercises in the course of a year.

## Further reading

- https://github.com/m-labs/misoc

- https://en.wikipedia.org/wiki/ZPU_(microprocessor)

- https://github.com/zylin/zpu

Issue details

[LiteX] Finish support for RISC-V in LiteX

Issue body

Brief explanation

LiteX currently supports the lm32 and mor1k architectures and has some preliminary support for RISC-V. It would be nice if it supported the RISC-V architecture fully.

Expected results

MiSoC is able to use a CPU core which supports the RISC-V architecture as an option when building SoC components.

# Detailed Explanation

## RISC-V

RISC-V (pronounced “risk-five”) is a new instruction set architecture (ISA) that was originally designed to support computer architecture research and education and is now set to become a standard open architecture for industry implementations under the governance of the RISC-V Foundation. RISC-V was originally developed in the Computer Science Division of the EECS Department at the University of California, Berkeley.

## Process

Steps would be;

- Figure out the best RISC-V compatible core to use.

- Figure out the toolchain needed.

- Import into MiSoC.

Some potential options are;

- https://github.com/cliffordwolf/picorv32 - PicoRV32 - A Size-Optimized RISC-V CPU

- http://fpga.org/grvi-phalanx/ ?

## Further reading

- https://github.com/m-labs/misoc

- http://riscv.org/

- http://www.lowrisc.org/

- https://en.wikipedia.org/wiki/RISC-V

Issue details

[LiteX] Add support for j2 (j-core) to LiteX

Issue body

Brief explanation

LiteX currently supports the lm32, mor1k & risc-v architectures. It would be nice if it also supported the J2 open processor.

Expected results

LiteX is able to use the J2 core as an option when building SoC components.

Detailed Explanation

## J2 open processor

j-core is a clean-room open source processor and SoC design using the SuperH instruction set, implemented in VHDL and available royalty and patent free under a BSD license.

The current j-core generation, j2, is compatible with the sh2 instruction set, plus two backported sh3 barrel shift instructions (SHAD and SHLD) and a new cmpxchg (mnemonic CAS.L Rm, Rn, @R0 opcode 0010-nnnn-mmmm-0011) based on the IBM 360 instruction. Because it uses an existing instruction set, Linux and gcc and such require only minor tweaking to support this processor.
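As a small illustration of the opcode pattern quoted above (`0010-nnnn-mmmm-0011`), the following sketch packs and unpacks the register fields of `CAS.L Rm, Rn, @R0`. The helper names are hypothetical and this is not taken from any j-core toolchain; it only shows how the bit fields fit together.

```python
CAS_L_BASE = 0b0010_0000_0000_0011  # fixed bits of 0010-nnnn-mmmm-0011
CAS_L_MASK = 0b1111_0000_0000_1111  # selects only the fixed bits


def encode_cas_l(rm: int, rn: int) -> int:
    """Return the 16-bit opcode for CAS.L Rm, Rn, @R0."""
    for name, reg in (("rm", rm), ("rn", rn)):
        if not 0 <= reg <= 15:
            raise ValueError(f"{name} must be a register number from 0 to 15")
    return CAS_L_BASE | (rn << 8) | (rm << 4)


def decode_cas_l(opcode: int):
    """Return (rm, rn) if the opcode is CAS.L, otherwise None."""
    if opcode & CAS_L_MASK != CAS_L_BASE:
        return None
    return (opcode >> 4) & 0xF, (opcode >> 8) & 0xF


if __name__ == "__main__":
    opcode = encode_cas_l(rm=1, rn=2)
    print(f"{opcode:#06x} {opcode:016b}")
```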

## Further reading

- http://j-core.org/

- http://lists.j-core.org/

- https://github.com/m-labs/misoc

- https://github.com/enjoy-digital/litex

Issue details

[HDMI2USB] Add "hardware mixing" support to HDMI2USB firmware

Issue body

[HDMI2USB-misoc-firmware 27] Add “hardware mixing” support to HDMI2USB firmware

More technical details are in the GitHub issue named above.

# Brief explanation

Add support for mixing multiple input sources (either HDMI or pattern) together and sending the result to any of the outputs.

## Expected results

The firmware command line has the ability to specify that an output is the combination of two inputs. These combinations should include dynamic changes like fading and wipes between two inputs.

# Detailed Explanation

The “Hardware Fader Design Doc” includes lots of information about how the mixing could be implemented.

You should read up on how to properly combine pixels in linear gamma space. All mixing should be done in linear gamma space. See http://www.poynton.com/PDFs/GammaFAQ.pdf or http://www.poynton.com/notes/colour_and_gamma/GammaFAQ.html

It might be useful to read up about the original Milkymist One firmware and the TMU (Texture Mapping Unit) used in that.
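The linear-space requirement can be illustrated in software before committing anything to gateware. The sketch below assumes 8-bit pixel values encoded with the sRGB transfer function; the real video path may use a different transfer curve (for example BT.709), and a hardware implementation would more likely use lookup tables than floating point. Function names are hypothetical.

```python
def srgb_to_linear(value_8bit):
    """Decode an 8-bit sRGB channel value to linear light in [0, 1]."""
    c = value_8bit / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(linear):
    """Encode linear light in [0, 1] back to an 8-bit sRGB channel value."""
    if linear <= 0.0031308:
        c = linear * 12.92
    else:
        c = 1.055 * linear ** (1 / 2.4) - 0.055
    return max(0, min(255, round(c * 255.0)))


def mix_pixel(pixel_a, pixel_b, fade):
    """Cross-fade two pixels; fade=0.0 gives pixel_a, fade=1.0 gives pixel_b."""
    return tuple(
        linear_to_srgb((1.0 - fade) * srgb_to_linear(a) + fade * srgb_to_linear(b))
        for a, b in zip(pixel_a, pixel_b)
    )


if __name__ == "__main__":
    black, white = (0, 0, 0), (255, 255, 255)
    # Averaging the encoded values directly would give mid-grey 128;
    # mixing in linear light gives a visibly brighter result.
    print(mix_pixel(black, white, 0.5))
```

A fade or wipe is then just this mix evaluated with `fade` changing per frame (fade) or per pixel position (wipe).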

## Further reading

- Examples in the mixxeo-soc which could be of use.

- HDMI2USB Website

- Migen / MiSoC

- HDMI2USB-misoc-firmware

# Contacts

- Potential Mentors: @mithro @enjoy-digital @shenki

- Mailing list: [email protected]

Issue details

[HDMI2USB] Get Milkymist "Video DJing" functionality on the Numato Opsis board

Issue body

Get Milkymist “Video DJing” functionality on the Numato Opsis board

# Brief explanation

The Milkymist board provided a bunch of cool Video DJing output options (see following pictures). We want the same type of thing working on the Numato Opsis board.

## Expected results

The Numato Opsis when combined with a Milkymist Expansion board is able to reproduce all functionality of the original Milkymist device.

# Detailed Explanation

A very detailed explanation of the implementation options can be found in the following Google Doc;

https://docs.google.com/document/d/1YyhCqTaUrI_vQjNGHJ34TsJCoDwpMzd2XcavAEllrUg/edit#

## Further reading

- HDMI2USB Website

- Migen / MiSoC

- Numato Opsis board

- TOFE Milkymist Board

- HDMI2USB MiSoC Firmware

# Knowledge Prerequisites

- C coding experience

- Verilog experience very useful

# Contacts

- Potential Mentors: @mithro @enjoy-digital @shenki

- Mailing list: [email protected]

Issue details

[HDMI2USB] Allow HDMI2USB devices to act as HDMI extenders via the Gigabit Ethernet port

Issue body

More technical details at HDMI2USB-misoc-firmware#133: Allow HDMI2USB devices to act as HDMI extenders via the Gigabit Ethernet port

# Brief explanation

Allow two HDMI2USB devices which are connected by Gigabit Ethernet to stream video from one device to the other.

# Detailed Explanation

It should be possible to stream the pixel data from one board to another board via Ethernet.

Gigabit Ethernet should be fast enough to transfer 720p60 video as raw pixel data (when using YUV encoding).
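A quick back-of-the-envelope check of that claim is shown below. It assumes YUV 4:2:2 at 16 bits per pixel, counts active pixels only (no blanking), and assumes standard 1500 byte payloads without jumbo frames; none of these parameters are fixed by this issue, so treat the numbers as an estimate.

```python
GIGABIT_LINE_RATE = 1_000_000_000  # bits per second on the wire

# Per-frame Ethernet overhead in bytes: preamble + SFD (8), MAC header (14),
# frame check sequence (4) and inter-packet gap (12).
ETHERNET_OVERHEAD_BYTES = 8 + 14 + 4 + 12
PAYLOAD_BYTES = 1500  # assumed maximum payload per frame


def raw_video_bitrate(width, height, fps, bits_per_pixel):
    """Bits per second needed for uncompressed active video."""
    return width * height * fps * bits_per_pixel


def usable_ethernet_bitrate(line_rate=GIGABIT_LINE_RATE, payload=PAYLOAD_BYTES):
    """Payload bits per second left after per-frame overhead."""
    return line_rate * payload / (payload + ETHERNET_OVERHEAD_BYTES)


if __name__ == "__main__":
    yuv422 = raw_video_bitrate(1280, 720, 60, 16)
    rgb888 = raw_video_bitrate(1280, 720, 60, 24)
    usable = usable_ethernet_bitrate()
    print(f"720p60 YUV 4:2:2: {yuv422 / 1e6:.1f} Mbit/s")
    print(f"720p60 RGB 8:8:8: {rgb888 / 1e6:.1f} Mbit/s")
    print(f"usable GigE:      {usable / 1e6:.1f} Mbit/s")
```

Under these assumptions the YUV stream fits with some headroom, while 24-bit RGB at the same resolution would not, which is why the YUV encoding matters.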

Both the Numato Opsis and Digilent Atlys boards have Gigabit Ethernet interfaces. The HDMI2USB-misoc-firmware already supports both these interfaces using liteEth.

As the two devices will be directly connected, you can use raw Ethernet frames rather than UDP/IP. It might be easier for debugging to support UDP/IP.

## Further reading

- HDMI2USB Website

- Migen / MiSoC

- liteEth

- HDMI2USB-misoc-firmware

- hdmi2eth target

# Contacts

- Potential Mentors: @mithro @enjoy-digital @shenki

- Mailing list: [email protected]

Issue details

[HDMI2USB] Convert the JPEG encoder from VHDL to Migen/MiSoC

Issue body

Brief explanation

The HDMI2USB firmware currently has a JPEG encoder written in VHDL. We would prefer it be written in Migen / MiSoC to allow better integration into the firmware.

## Expected results

The HDMI2USB MiSoC Firmware correctly generates JPEG output using new Migen / MiSoC JPEG encoder.

The new Migen / MiSoC JPEG encoder has a strong test suite.

# Detailed Explanation

The current JPEG encoder is based on mkjpeg from opencores but has been slightly modified by @enjoy-digital and @ajitmathew. The current encoder has the following problems;

- It is written in VHDL, which means;

  - It isn’t easy to simulate using FOSS tools,

  - An adapter between it and migen/misoc is needed.

- It has been the cause of numerous bugs.

- It doesn’t have a strong test suite, making modification hard.

Using Migen / MiSoC would allow much better testing.

As there is an existing implementation of the encoder, the conversion process should be simpler. cfelton’s JPEG encoder example might be a useful source too.

## Further reading

- Replace VHDL jpeg encoder with Verilog version

- Migen / MiSoC

- cfelton’s JPEG MyHDL repo

- JPEG encoding

- mkjpeg from opencores

# Contacts

- Potential Mentors: @shenki @mithro @enjoy-digital

- Mailing list: [email protected]

Issue details

[HDMI2USB] Port Linux to the lm32 CPU and support HDMI2USB firmware functionality

Issue body

Brief explanation

The HDMI2USB gateware currently includes an lm32 soft core. The misoc version has an MMU and should support running a full Linux kernel.

## Expected results

Linux booting on the HDMI2USB gateware.

# Detailed Explanation

There is a bunch of extra information in the LiteX Linux Support Random Notes Google Doc.

The HDMI2USB-misoc-firmware embeds an LM32 soft-core for controlling and configuring the hardware. See the diagram below;

This soft-core should be able to run the Linux kernel, which would give us access to a lot of good things;

- Access to a well tested TCP/IP stack (useful for IP based streaming).

- Access to a well tested USB stack (useful for the USB-OTG connector).

- Access to kernel mode setting for EDID processing and DisplayPort stuff.

Work on this was started by the MilkyMist / M-Labs people.

There is an lm32 port of QEMU which will help; see https://github.com/timvideos/HDMI2USB-misoc-firmware/issues/86

Further reading

- https://en.wikipedia.org/wiki/LatticeMico32

- https://lwn.net/Articles/647949/

- http://m-labs.hk/milkymist-wiki/wiki/index.php%3Ftitle=Milkymist_Linux_cheat_sheet.html

- https://wiki.debian.org/HelmutGrohne/rebootstrap#lm32

- www.latticesemi.com/view_document?document_id=28118 - Linux Port to LatticeMico32 System Reference Guide

- http://www.ubercomp.com/jslm32/src/ - Javascript LatticeMico32 Emulator (runs Linux) - Ubercomp

# Knowledge Prerequisites

- Linux Kernel knowledge

- Strong C knowledge.

Issue details

[streaming-system] Automated build and configuration of full streaming system

Issue body

Brief explanation

A system to build and configure all of the machines needed to record and stream a multi track conference.

Fully automated deployment of both the collecting infrastructure (parts inside a conference venue / user group venue) and the off site infrastructure (parts in the cloud).

## Expected results

Convert existing scripts and manual processes into a consistent and modular set of deployment system templates.

Demonstrate it working in VMs.

# Detailed Explanation

## Problem

A 25 track conference needs about 60 physical and 30 virtual machines. Each needs an OS installed, a host name assigned, networking that plays well with the conference infrastructure, and various applications installed and configured.

## Setup

For production there will be a physical box with Debian stable, access to a standard Debian repository, a sudo user, a master config file, a file of secrets and a script. The script will install and configure the services needed to provide automated PXE install for the remaining machines, and will provision and configure the VMs.

For testing, there will be a single physical box running lots of VMs that replicates the production setup. This adds a layer of complexity, but it is the only sensible way to test, maintain and enhance such a system.

## Configuration

Everything possible will be contained in the master config file. There should be no editing of additional config files by hand. Part of this project will be to determine everything that needs to be included in the master config file. The file will likely be created by the veyepar system, which will be responsible for transforming the conference schedule into a consistent format and providing a UI to manage such things as:

Talks in the room that the attendees know as “UB2.252A (Lameere)” will have a URL of www.timvideos.com/fosdem2014/lameere and an IRC channel of #fosdem-lameere; the video mixer machine has a MAC address of 00:11:22…; content source machines: [22:33:44… 44:55:66…]; host names: lameere-mixer, lameere-source-1 and -2; and some way of specifying whether the encoders will be EC2, RackSpace or local VMs.

## Pieces

- collecting infrastructure

  - dvswitch or gst-switch

  - flumotion collector (watchdog, register, etc)

- streaming infrastructure (EC2)

  - timvideos frontend website

  - timvideos backend website

  - timvideos support (IRC log bot, etc)

  - flumotion encoder (watchdog, register, etc)

## Further reading

Existing build systems that do quite a bit of what is needed:

https://github.com/yoe/fosdemvideo/blob/master/doc/README

https://github.com/CarlFK/veyepar/blob/master/setup/nodes/pxe/README.txt

https://github.com/timvideos/streaming-system/blob/master/tools/setup/runall.sh

# Knowledge Prerequisites

- Good understanding of deploying machines and systems such as PXE boot.

# Contacts

- Potential Mentors: @CarlFK @codersquid @iiie

- Mailing list: [email protected]

Issue details

[gstreamer] Create a gstreamer plugin for Lenkeng HDMI over IP extender

Issue body

Brief explanation

Create a gstreamer plugin for sending and receiving from Lenkeng LKV373 (and compatible) devices.

You’ll need to get a Lenkeng LKV373 as part of this project!

## Expected results

- Two gstreamer plugins;

- lkv373-sink – Sends data from gstreamer to the output part of the device.

- lkv373-src – Receives data from the device and sends into gstreamer.

- Documentation on using the two gstreamer plugins

- Technical documentation of LKV373 protocol

# Detailed Explanation

There’s an HDMI extender device, the Lenkeng LKV373, which captures HDMI, transcodes the video into MJPEG and outputs the stream over multicast UDP.

The devices are designed to run as a sender + receiver pair, but someone has reverse engineered the wire protocol and written some notes on reverse engineering the units:

http://danman.eu/blog/?p=110

You may need to get out a soldering iron so you can access the serial port on the device.
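Before writing any GStreamer code, it can help to confirm that the multicast stream is reaching the development machine at all. The sketch below joins a multicast group and opens a UDP socket for reading. The group address and port are placeholder assumptions, not values given in this issue; check them against the reverse engineering notes linked above. The packet layout is deliberately not parsed, and the function names are hypothetical.

```python
import socket
import struct

ASSUMED_GROUP = "226.2.2.2"  # placeholder, confirm against the notes
ASSUMED_PORT = 2068          # placeholder, confirm against the notes


def membership_request(group, interface="0.0.0.0"):
    """Build the ip_mreq structure used with IP_ADD_MEMBERSHIP."""
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(interface))


def open_multicast_receiver(group=ASSUMED_GROUP, port=ASSUMED_PORT):
    """Return a UDP socket subscribed to the given multicast group."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    membership_request(group))
    return sock


if __name__ == "__main__":
    receiver = open_multicast_receiver()
    receiver.settimeout(5.0)
    try:
        data, sender = receiver.recvfrom(2048)
        print(f"got {len(data)} bytes from {sender}")
    except socket.timeout:
        print("no packets seen; check the group, port and network")
    finally:
        receiver.close()
```

An `lkv373-src` element would do the equivalent of this in C and then reassemble the received chunks into JPEG frames before pushing them downstream.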

## Further reading

- https://github.com/timvideos/HDMI2USB/wiki/Alternatives#wiki-ethernet-capture-devices

- Example code – Python

- Example code – Mixer GUI

- GStreamer Plugin Writer’s Guide

- DVSwitch gstreamer plugin – Simple example plugin.

# Knowledge Prerequisites

- Strong coding skills. (C experience preferred!)

- (Good to have) Some multimedia coding experience.

- (Good to have) gstreamer coding experience.

- (Good to have) JPEG / MJPEG understanding.

- (Good to have) Wireshark / Reverse engineering experience.

# Contacts

- Potential Mentors: @mithro @micolous @thaytan

- Mailing list: [email protected]


Issue details

[Veyepar] Use avahi / zeroconfig to find our hosts on the network

Issue body

Brief explanation

We’d like to worry about fewer things; who wouldn’t?

If we’re setting up a room with a couple of computers to record a talk, we shouldn’t have to configure the network. It should “just work”.

Avahi makes this easy; hosts that are farther away take longer to respond.

Make a setup program that updates the configs for either DVSwitch or GSTSwitch.


## Further reading

- https://code.google.com/p/pybonjour/

- Avahi examples;

  - See PythonPublishExample for a relatively full service publisher using dbus-python.

  - See PythonBrowseExample for a simple service browser using dbus-python


# Contacts

- Potential Mentors: TimVideos / Veyepar Team

- Mailing list: [email protected]

Issue details

[streaming-system] TimVideos.us website (viewing interface) improvements

Issue body

Some more details at Streaming system Issue #42.

# Brief explanation

Make the timvideos.us website (the viewing interface for the streams) dynamically generated from a database rather than the config file. This will also include improving the frontend website.

## Expected results

- Updates to the Frontend part of the timvideos.us website.

# Detailed Explanation

This will also include improving the frontend website to support things like;

- Proper theming of each channel. (Themes separate from channels are needed so you can define a “Linux.conf.au 2015 theme” which is then used by multiple channels.)

- Adding conference level pages (currently a version of the front page which groups together channels for each conference and supports theming for that conference).

- Reworking the front timvideos.us page.

- Accounts for control over each channel (admin level only, no user level accounts).

- Admin interface for configuring channels.

- Web based control of schedule download.

- Support for proper backing up of the website.


# Knowledge Prerequisites

- Django and Python web application development.

- Graphic design experience very useful.

# Contacts

- Potential Mentors: @iiie @mithro

- Mailing list: [email protected]

Issue details

[Veyepar] JSON schedule output into website

Issue body

Brief explanation

Get the Zookeepr / Symposion (PyCon website) JSON schedule output into the website + veyepar.

A feed validator is needed too.

# Problem

The streaming UI and the video processing rely on the talk schedule. This data is typically held in the conference website. There is sometimes an API available.

These APIs are not always accurate; for example, they may be missing the keynote entries, or the start time may be on a date that is not part of the conference.

Things that will help:

- A spec defining what data is needed.

- A validator to inspect provided data. (A command line tool to validate a local file, and a public facing web page that lets conference web site developers test their API.)

- Work with the open source conference site projects to add the API to their codebase.
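A first cut of the command line validator could look something like the sketch below. The required field names here are hypothetical stand-ins, since the spec in the first bullet does not exist yet; the real list should come from the description at the top of addeps.py. The date check targets the "start time on a date that is not part of the conference" problem mentioned above.

```python
import json
from datetime import date, datetime

# Hypothetical field names; the real spec still has to be written.
REQUIRED_FIELDS = ("name", "room", "start", "duration")


def validate_schedule(entries, first_day, last_day):
    """Return a list of human readable problems found in the schedule."""
    problems = []
    for index, entry in enumerate(entries):
        missing = [field for field in REQUIRED_FIELDS if not entry.get(field)]
        if missing:
            problems.append(f"entry {index}: missing {', '.join(missing)}")
        start = entry.get("start")
        if not start:
            continue
        try:
            start_day = datetime.fromisoformat(start).date()
        except (TypeError, ValueError):
            problems.append(f"entry {index}: unparseable start {start!r}")
            continue
        if not first_day <= start_day <= last_day:
            problems.append(f"entry {index}: start {start_day} is outside the conference")
    return problems


if __name__ == "__main__":
    sample = json.loads("""[
        {"name": "Keynote", "room": "Main", "start": "2014-02-01T09:00:00", "duration": "01:00"},
        {"name": "Lightning talks", "room": "Main", "start": "2014-03-01T09:00:00"}
    ]""")
    for problem in validate_schedule(sample, date(2014, 2, 1), date(2014, 2, 2)):
        print(problem)
```

Returning a list of problems rather than raising on the first one lets the same function back both the command line tool and a public web page.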

There are 3 popular conference systems:

http://zookeepr.org - https://github.com/zookeepr/zookeepr

http://pentabarf.org - https://github.com/nevs/pentabarf

http://eldarion.com/symposion/ - https://github.com/pinax/symposion

All 3 have schedule exports - examples:

http://www.pytennessee.org/api/schedule_json/

http://lca2013.linux.org.au/programme/schedule/json

https://fosdem.org/2014/schedule/xml

These two systems consume it:

https://github.com/timvideos/streaming-system

https://github.com/CarlFK/veyepar/blob/master/dj/scripts/addeps.py

A description of the data is at the top of addeps.py, and there is a little more at http://nextdayvideo.com/page/metadata.html

https://github.com/CarlFK/veyepar/blob/master/dj/scripts/addeps.py#L288 stores the data to the model at https://github.com/CarlFK/veyepar/blob/master/dj/main/models.py#L228

The reason addeps.py is 2200 lines long is that the conference system coders keep changing things. Mostly it is because the Symposion system doesn’t have the code, so each time someone installs it they write their own. Things evolve too, like adding “what kind of license is it released under?”

The Symposion team has not accepted the pull request that will help stabilize the API:

https://github.com/pinax/symposion/pull/45

# Contacts

- Potential Mentors: TimVideos/Veyepar team

Issue details

[HDMI2USB] Create a serial port extension board - Support both RS232 and RS485 modes.

Issue body

Brief explanation

Create a serial port extension board that supports both RS232 and RS485 modes.

It should also have lots of blinking lights.

The firmware should be modified so that the serial ports appear as USB-CDC ports to the computer.

# Detailed Explanation

This can be achieved using the SP331 transceiver.

The SP331 is a programmable RS-232 and/or RS-485 transceiver IC. The SP331 contains four drivers and four receivers when selected in RS-232 mode; and two drivers and two receivers when selected in RS-485 mode. The SP331 also contains a dual mode which has two RS-232 drivers/receivers plus one differential RS-485 driver/receiver.

The RS-232 transceivers can typically operate at 230kbps while adhering to the RS-232 specifications. The RS-485 transceivers can operate up to 10Mbps while adhering to the RS-485 specifications. The SP331 includes a self-test loopback mode where the driver outputs are internally configured to the receiver inputs. This allows for easy diagnostic serial port testing without using an external loopback plug. The RS-232 and RS-485 drivers can be disabled (High-Z output) by controlling a set of four select pins.

## Further reading

- HDMI2USB Website

- HDMI2USB-misoc-firmware

- Migen / MiSoC

# Contacts

- Potential Mentors: @mithro @enjoy-digital @shenki

- Mailing list: [email protected]

Issue details

[HDMI2USB] HDMI Audio to USB Sound

Issue body

More technical details at

- HDMI2USB-misoc-firmware #160

- HDMI2USB-jahanzeb-firmware #15

# Brief explanation

Push audio from HDMI to USB sound in the HDMI2USB project.

## Expected results

HDMI audio is captured on a computer connected to the HDMI2USB device.

# Detailed Explanation

HDMI supports sending audio over the interface (in data islands within the blanking areas). This audio should be captured and sent up the USB interface.

## Further Reading

- HDMI2USB Website

- Migen / MiSoC

- HDMI2USB-misoc-firmware

- USB Audio specification

- HDMI Data Islands

- HDMI Audio

# Contacts

- Potential Mentors: @shenki @mithro @enjoy-digital

- Mailing list: [email protected]

Issue details

[HDMI2USB] Create a 3G-SDI extension board for HDMI2USB

Issue body

More technical details at

- HDMI2USB-misoc-firmware #158

- HDMI2USB-jahanzeb-firmware #11

# Brief explanation

Add support for the 3G Serial Digital Interface (3G-SDI) to the HDMI2USB.

This project will let us push and pull lots of data over a wider range of protocols / cables / systems.

# Detailed Explanation

The SDI interface is used by a large number of high end devices. It would be good for the HDMI2USB firmware to support the standards and protocols.

This will probably require development of a TOFE expansion board which has SDI connectors, or else some way to adapt the DisplayPort connector to SDI.

## Further reading

- SDI Information - http://en.wikipedia.org/wiki/Serial_digital_interface

- Xilinx Information - http://www.xilinx.com/products/intellectual-property/SMPTE_SDI.htm

- TOFE Interface

# Contacts

- Potential Mentors: @shenki @mithro @enjoy-digital

- Mailing list: [email protected]

Issue details

timvideos/streaming-system

Change frontend to be static website

Issue body

The current frontend is a mixture of client side and server generated content. For example, each group has its own set of JS functions generated when the index page is rendered on the server. Instead of doing this, move to a purely standalone frontend that interacts with the backend via API calls.

Issue details

dvswitch incorrectly crops images in previews when running 16:9 AR

Issue body

The output feed is correct, but the display shown to the dvswitch operator is wrong.

This is what is shown in dvswitch to the operator. Note that the 6 is cut off on the right side, and part of the flower is cut off on the left side.

This is the DV feed from the dvswitch protocol, as displayed in totem and recordings.

Issue details

Support "in-room" template when behind CloudFlare

Issue body

Previously we used X-Forwarded-For but CloudFlare doesn’t seem to use that. Also unsure how it interacts with nginx.

Found the following article;

https://support.cloudflare.com/hc/en-us/articles/200170986-How-does-CloudFlare-handle-HTTP-Request-headers-
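One possible approach, sketched below, is to prefer CloudFlare's `CF-Connecting-IP` request header and fall back to `X-Forwarded-For` and then the socket address. The sketch works on a WSGI-style environ mapping (the same keys Django exposes in `request.META`). The helper names and the in-room network list are hypothetical, and the headers should only be trusted when the request really arrived via CloudFlare or our own nginx.

```python
import ipaddress


def client_ip(environ):
    """Best guess at the visitor's address from proxy headers."""
    cf_ip = environ.get("HTTP_CF_CONNECTING_IP")
    if cf_ip:
        return cf_ip.strip()
    forwarded = environ.get("HTTP_X_FORWARDED_FOR")
    if forwarded:
        # The left-most entry is the original client.
        return forwarded.split(",")[0].strip()
    return environ.get("REMOTE_ADDR")


def is_in_room(ip, room_networks):
    """True if the address falls inside one of the venue networks."""
    try:
        address = ipaddress.ip_address(ip)
    except (TypeError, ValueError):
        return False
    return any(address in ipaddress.ip_network(net) for net in room_networks)


if __name__ == "__main__":
    environ = {"HTTP_CF_CONNECTING_IP": "203.0.113.7", "REMOTE_ADDR": "198.51.100.1"}
    # 203.0.113.0/24 stands in for a venue network (documentation range).
    print(is_in_room(client_ip(environ), ["203.0.113.0/24"]))
```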

Issue details

Probably don't need seconds in the talk next time

Issue body

Issue details

No quick way to see the details about a talk (more info causes you to leave the site)

Issue body

Quick fix:

- open the link in new window

Longer fix:

- get the long descriptions in some type of pop-up / fold out / etc

Issue details

Can't generate preview images from a YouTube stream

Issue body

Need a gstreamer / mplayer / something else to create preview images.

Issue details

Googlebot cannot access CSS and JS files on http://timvideos.us/

Issue body

To: Webmaster of http://timvideos.us/,

Google systems have recently detected an issue with your homepage that affects how well our algorithms render and index your content. Specifically, Googlebot cannot access your JavaScript and/or CSS files because of restrictions in your robots.txt file. These files help Google understand that your website works properly so blocking access to these assets can result in suboptimal rankings.
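A candidate robots.txt can be checked locally before deploying it, using Python's standard `urllib.robotparser`. In the sketch below both the rules and the asset path are made-up examples, not the site's actual robots.txt or file layout.

```python
from urllib.robotparser import RobotFileParser


def can_fetch(robots_txt, user_agent, url):
    """Check whether the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rule sets and asset path, for illustration only.
BLOCKING_RULES = "User-agent: *\nDisallow: /static/\n"
OPEN_RULES = "User-agent: *\nDisallow:\n"
ASSET_URL = "http://timvideos.us/static/css/site.css"

if __name__ == "__main__":
    for label, rules in (("blocking", BLOCKING_RULES), ("open", OPEN_RULES)):
        allowed = can_fetch(rules, "Googlebot", ASSET_URL)
        print(f"{label}: Googlebot allowed = {allowed}")
```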

Issue details

runall.sh wont run a 2nd time

Issue body

juser@trist:~$ streaming-system/tools/setup/runall.sh

When it errors and I fix the error, running it again gives other errors like these two:

fatal: destination path ‘gst-plugins-dvswitch’ already exists and is not an empty directory.

- sudo ln -s /usr/local/lib/gstreamer-0.10/libgstdvswitch.so /usr/lib/gstreamer-0.10/

ln: failed to create symbolic link ‘/usr/lib/gstreamer-0.10/libgstdvswitch.so’: File exists
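Both failures come from steps that assume a clean machine. The sketch below shows the idea of making each step safe to repeat: skip the clone when the checkout already exists, and replace an existing link instead of failing (the same effect as `ln -sf` in shell). It is written in Python only for illustration; runall.sh itself is a shell script, and the helper names are hypothetical.

```python
import os
import subprocess


def ensure_clone(repo_url, dest):
    """Clone repo_url into dest unless a checkout is already there."""
    if os.path.isdir(os.path.join(dest, ".git")):
        return False  # already cloned on a previous run
    subprocess.check_call(["git", "clone", repo_url, dest])
    return True


def ensure_symlink(target, link_path):
    """Make link_path point at target, replacing a stale link or file."""
    if os.path.islink(link_path) and os.readlink(link_path) == target:
        return  # already correct, nothing to do
    if os.path.lexists(link_path):
        os.remove(link_path)  # removes files and links, not directories
    os.symlink(target, link_path)
```

With steps written this way, rerunning the script after fixing one error picks up where it left off rather than tripping over its own earlier work.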

Issue details

install missing gs...-dev deps

Issue body

configure: error:

You need to install or upgrade the GStreamer development

packages on your system.

I did, it seemed to fix this.

juser@trist:~$ sudo apt-get install libgstreamer1.0-dev libgstreamer-plugins-base1.0-dev

I am not sure where it errored, maybe this can help

Makefile.am: installing ‘./INSTALL’

src/Makefile.am: installing ‘./depcomp’

autoreconf: no config.status: cannot re-make

autoreconf: Leaving directory `.’

checking for a BSD-compatible install… /usr/bin/install -c

checking whether build environment is sane… yes

checking for a thread-safe mkdir -p… /bin/mkdir -p

checking for gawk… gawk

checking whether make sets $(MAKE)… yes

checking whether make supports nested variables… yes

checking whether to enable maintainer-specific portions of Makefiles… yes

checking for gcc… gcc

checking whether the C compiler works… yes

checking for C compiler default output file name… a.out

checking for suffix of executables…

checking whether we are cross compiling… no

checking for suffix of object files… o

checking whether we are using the GNU C compiler… yes

checking whether gcc accepts -g… yes

checking for gcc option to accept ISO C89… none needed

checking whether gcc understands -c and -o together… yes

checking for style of include used by make… GNU

checking dependency style of gcc… gcc3

checking build system type… x86_64-unknown-linux-gnu

checking host system type… x86_64-unknown-linux-gnu

checking how to print strings… printf

checking for a sed that does not truncate output… /bin/sed

checking for grep that handles long lines and -e… /bin/grep

checking for egrep… /bin/grep -E

checking for fgrep… /bin/grep -F

checking for ld used by gcc… /usr/bin/ld

checking if the linker (/usr/bin/ld) is GNU ld… yes

checking for BSD- or MS-compatible name lister (nm)… /usr/bin/nm -B

checking the name lister (/usr/bin/nm -B) interface… BSD nm

checking whether ln -s works… yes

checking the maximum length of command line arguments… 1572864

checking whether the shell understands some XSI constructs… yes

checking whether the shell understands “+=”… yes

checking how to convert x86_64-unknown-linux-gnu file names to x86_64-unknown-linux-gnu format… func_convert_file_noop

checking how to convert x86_64-unknown-linux-gnu file names to toolchain format… func_convert_file_noop

checking for /usr/bin/ld option to reload object files… -r

checking for objdump… objdump

checking how to recognize dependent libraries… pass_all

checking for dlltool… no

checking how to associate runtime and link libraries… printf %s\n

checking for ar… ar

checking for archiver @FILE support… @

checking for strip… strip

checking for ranlib… ranlib

checking command to parse /usr/bin/nm -B output from gcc object… ok

checking for sysroot… no

checking for mt… mt

checking if mt is a manifest tool… no

checking how to run the C preprocessor… gcc -E

checking for ANSI C header files… yes

checking for sys/types.h… yes

checking for sys/stat.h… yes

checking for stdlib.h… yes

checking for string.h… yes

checking for memory.h… yes

checking for strings.h… yes

checking for inttypes.h… yes

checking for stdint.h… yes

checking for unistd.h… yes

checking for dlfcn.h… yes

checking for objdir… .libs

checking if gcc supports -fno-rtti -fno-exceptions… no

checking for gcc option to produce PIC… -fPIC -DPIC

checking if gcc PIC flag -fPIC -DPIC works… yes

checking if gcc static flag -static works… yes

checking if gcc supports -c -o file.o… yes

checking if gcc supports -c -o file.o… (cached) yes

checking whether the gcc linker (/usr/bin/ld -m elf_x86_64) supports shared libraries… yes

checking whether -lc should be explicitly linked in… no

checking dynamic linker characteristics… GNU/Linux ld.so

checking how to hardcode library paths into programs… immediate

checking whether stripping libraries is possible… yes

checking if libtool supports shared libraries… yes

checking whether to build shared libraries… yes

checking whether to build static libraries… yes

checking for pkg-config…

checking for pkg-config… /usr/bin/pkg-config

checking pkg-config is at least version 0.9.0… yes

checking for GST… no

configure: error:

You need to install or upgrade the GStreamer development

packages on your system. On debian-based systems these are

libgstreamer1.0-dev and libgstreamer-plugins-base1.0-dev.

on RPM-based systems gstreamer1.0-devel, libgstreamer1.0-devel

or similar. The minimum version required is 1.2.1.

configure failed

Issue details

Graphs don't really show all stream details

Issue body

See screenshot below;

Issue details

Improve the existing graphs using Chart.js

Issue body

Improve the existing graphs using [Chart.js | Open source HTML5 Charts for your website](http://www.chartjs.org/).

Issue details

Trying to fix the watchdog wrapper.

Issue body

Issue details

website: "irclog" group variable attempts to interpolate strings

Issue body

At present, the irclog variable attempts to interpolate strings (à la sprintf format strings).

This shouldn’t be done, because if you have an irclog URI containing a %, it isn’t included correctly in the page. So the value:

http://room-logs.timvideos.us/%23%23lca2015-fp/preview.log.html

Is instead included as

http://room-logs.timvideos.us/ %23lca2015-fp/preview.log.htm
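
If the interpolation cannot simply be removed, one common workaround is to escape literal percent signs before the value reaches a `%`-style format. Here is a minimal Python sketch. It assumes the value is later passed through Python's `%` operator, which the issue does not confirm, and `escape_percent` is a hypothetical helper.

```python
def escape_percent(value):
    """Double every '%' so %-style formatting reproduces the value unchanged."""
    return value.replace("%", "%%")


irclog = "http://room-logs.timvideos.us/%23%23lca2015-fp/preview.log.html"

# With no conversion specifiers left, formatting just collapses each '%%'
# back into a single '%'.
rendered = escape_percent(irclog) % ()
assert rendered == irclog
```

The cleaner fix is still the one the issue asks for: do not treat the stored value as a format string at all.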

Issue details

Port data.py to read from a database instead

Issue body

At present the schedule scraper writes out a bunch of Python code which is imported. This isn’t very nice to use; port it to use some database model instead.

Issue details

Implement admin.py

Issue body

None of the models inside of the website have Django Admin defined. This needs to be implemented.

Issue details

*Don't Merge* - Docker

Issue body

@maxstr’s changes for Docker; however, they have lots of issues.

Lots of files here shouldn’t be added (e.g. tools/flumotion-config/pusher/lib/*)

Issue details

Various setup.sh fixes

Issue body

This was failing on a brand new installation (worked fine for redeploying where it had already been used). Some fixes. Works here now on a brand new Ubuntu 12.04 install.

Issue details

Rip out a lot of cruft from flumotion

Issue body

- Crazy module import system.

- Crazy module download system.

- All the admin stuff - replace with JSON endpoints.

- Merge the many repositories.

- Rename the project.

Issue details

Create "configuration" django app to replace the config.json file

Issue body

Related to the idea of moving the configuration to a database.

Issue details

Move schedule RSS+JSON feeds into their own django app

Issue body

Maybe use a database or something rather than the data.py file.

Issue details

Setup nginx-rtmp-module to create hls/dash versions of the flumotion stream.

Issue body

I was unable to get flumotion working with hls. This module might be a way around it.

Issue details

Change from jwplayer to using video.js, projekktor or mediaelementjs

Issue body

While jwplayer is technically “open source”, it’s CC-BY-NC, which is not free. Video.js and Projekktor look like decent, really open source replacements.

Issue details

Investigate gstreamer-streaming-server

Issue body

http://mindlinux.wordpress.com/2013/10/22/latest-gstreamer-streaming-server-features-video-on-demand-smooth-streaming-and-drm-david-schleef-rdio-inc/

http://superuser.com/questions/443411/http-streaming-with-gst-launch-gstreamer

If you want to stream over the network, start your web server and use the correct pipeline:

gst-launch-1.0 videotestsrc is-live=true ! x264enc ! mpegtsmux ! hlssink max-files=5 playlist-root=http://server.com location=/var/www/hlssink playlist-location=/var/www/hlssink

https://coaxion.net/blog/2014/05/http-adaptive-streaming-with-gstreamer/

http://cgit.freedesktop.org/gstreamer/gst-plugins-bad/tree/ext/hls

Issue details

Replace JWPlayer with fully free (libre) software equivalent

Issue body

JWPlayer is released under a CC BY-NC-SA 3.0 license – the NC (non-commercial) part means it doesn’t satisfy the Debian Free Software Guidelines (DFSG) and hence is not truly “free (libre) software”.

The choice of using JWPlayer was made back when flash video support was needed because HTML5 video was not supported by a majority of browsers (JWPlayer was the only option which allowed for seamless flash and HTML5 integration). This is no longer the case with almost all browsers supporting HTML5! Flash support is only needed for RTMP adaptive streaming, which we don’t really use/support at the moment.

We should either write our own replacement or convert to using an existing FOSS video player.

Features we need are;

- HTML5 support

- Support for multiple video formats (WebM / H264) – must auto detect.

- Support for multiple quality levels (SD, HD, audio only, etc) – must auto detect.

Features we’d ideally love to support are;

- Some type of DVR / seek into the past support.

- Adaptive streaming (https://developer.mozilla.org/en-US/docs/DASH_Adaptive_Streaming_for_HTML_5_Video)

- Mobile support

- YouTube Live event support / Amazon Cloud Front backend support (probably need RTMP support for this?)

- Great statistic / analytics support

Issue details

vagrant to demonstrate install

Issue body

Issue details

Create a docker package for flumotion

Issue body

Currently we have 1 flumotion per stream. This currently uses 1 machine per stream as managing the configuration of multiple streams on one machine is too hard.

We should package flumotion up in a docker thingy - https://www.docker.io/

“Docker is an open-source project to easily create lightweight, portable, self-sufficient containers from any application. The same container that a developer builds and tests on a laptop can run at scale, in production, on VMs, bare metal, OpenStack clusters, public clouds and more.”

# Flumotion in the TimVideos streaming system

There are 3 types of flumotion configurations; they should share a base docker image, but each should also be provided as a separate, correctly configured docker image.

- Collector - runs on site at a conference and does light encode to compress data so you can send it over the internet to an encoder.

- Requires dvswitch support.

- Encoder - runs in the cloud and converts video from the light encode format to something suitable for people to view in their browser.

- Repeater/Amplifier - runs in the cloud and streams a copy of the encoder output. Allows the system to scale past the limits of a single machine.

There are a couple of support applications we use with flumotion; these should be installed inside the docker image and started as part of the container.

- watchdog - Restarts failed components and does a full system restart when things go totally wrong.

- register - Registers with the tracker website (so the stream can be found) and sends statistics and logs for reporting and load balancing support.

Issue details

Add "lip sync" or other delay detector

Issue body

Issue details

Add documentation on how to contribute to the project

Issue body

Add documentation on how to contribute that you can point to as a starting point for new people to read. For example, when a new person shows up in IRC, you’d point them to this.

As for where the documentation could live – GitHub treats CONTRIBUTING.md as a first class citizen, so you could put the documentation in that, and then also put it in the README as a link. Or, you could document it on the wiki and have a link to that in CONTRIBUTING and the README.

Issue details

Track client side video quality and performance

Issue body

We should be collecting video quality and performance stats on the client side and then report on them. Maybe through Google Analytics?

# Firefox

- mozParsedFrames - number of frames that have been demuxed and extracted out of the media.

- mozDecodedFrames - number of frames that have been decoded - converted into YCbCr.

- mozPresentedFrames - number of frames that have been presented to the rendering pipeline for rendering - were “set as the current image”.

- mozPaintedFrames - number of frames which were presented to the rendering pipeline and ended up being painted on the screen. Note that if the video is not on screen (e.g. in another tab or scrolled off screen), this counter will not increase.

- mozFrameDelay - the time delay between presenting the last frame and it being painted on screen (approximately).

# Webkit

- webkitAudioBytesDecoded - number of audio bytes that have been decoded.

- webkitVideoBytesDecoded - number of video bytes that have been decoded.

- webkitDecodedFrames - number of frames that have been demuxed and extracted out of the media.

- webkitDroppedFrames - number of frames that were decoded but not displayed due to performance issues.

Issue details

Move room configuration from config.json to database

Issue body

Issue details

Create a web-rtc flumotion component

Issue body

WebRTC is awesome; let’s see if we can use it.

Issue details

Change setup scripts to some type of configuration management system

Issue body

Issue details

Port website to new style "Class Based Views"

Issue body

https://docs.djangoproject.com/en/dev/topics/class-based-views/

Issue details

Upgrade to jwplayer 6.0

Issue body

We currently use jwplayer 5.0 which is the previous version of the player. The new version includes a lot of new features such as;

- Extended device support, including mobile support

- Built in support for Captions (is this useful?)

- Improved Javascript API

- Improved analytics, including a lot more information

- Improved streaming support, including inbuilt quality selection and better adaptive support

- Better embedding and quicker loading.

Some further details about migrating can be found at http://www.longtailvideo.com/support/jw-player/28834/migrating-from-jw5-to-jw6 and http://www.longtailvideo.com/support/bits-on-the-run/31912/upgrading-players-to-jw6/ and http://www.jwplayer.com/upgrade-steps/

While eventually we hope to move to a completely FOSS solution (see issue #60), upgrading to the latest JWPlayer would give us a lot of extra functionality quickly so it is probably still worth doing.

Issue details

Refactor the Javascript to use classes

Issue body

Issue details

Replace watchdog with something that uploads component status to website

Issue body

Make a status page for each encoder.

Issue details

Generate minimized Javascript/CSS output

Issue body

Issue details

Add better client side error report

Issue body

This project aims to create an automated bug reporting system for TimVideos website. The system performs automated data collection for debugging purposes and this data is transferred to a database. An admin can then view the reported bugs, give them a priority, mark them as resolved etc.

https://github.com/timvideos/getting-started/issues/4

Issue details

Finish blackmagic component

Issue body

Should support both the open source gstreamer component and the commercial one.

Issue details

Add "continuous frames" to Flumotion

Issue body

Create a flumotion component which produces blank frames when feeder runs out.

Check out the inter stuff on the portable.

Issue details

Create a Justin TV Flumotion component

Issue body

We have a justintv component using the following pipeline:

rtmpsink location=rtmp://live.justin.tv/app/%(justintv_key)s

According to the following page: http://apiwiki.justin.tv/mediawiki/index.php/VLC_Broadcasting_API

enc=x264{keyint=60,idrint=2},vcodec=h264,vb=300,acodec=mp4a,ab=32,channels=2,samplerate=2205

Issue details

Add JavaScript analytics

Issue body

The system has a nice stat tracking feature. Make the frontend record everything possible about the client experiences.

Issue details

Merge DVSwitch, DVfifo and Firewire flumotion components.

Issue body

They have 95% common code. Refactor them to have a common base class.

Issue details

Add control over twinpac (via IR sender)

Issue body

Currently looking at IRtoy. Write a config file which lets us control the twinpac (and automatically make it all happy).

Issue details

Monitoring to everything!

Issue body

We need a better way to monitor everything; munin seems like the best bet.

Each encoder/collector/amplifier should register on the main server.

Issue details

Push preview images to S3

Issue body

Preview images are consuming a massive amount of bandwidth. Pushing them to S3 or similar CDN would be awesome.

Issue details

Add a bug reporting functionality

Issue body

Create a website/webpage which records information as requested below;

If you have other problems like the sound being quiet or the display being wrong in any way, or if you can't get the stream to work, please send me an email with the following details;

* Browser Type (Chrome, Safari, IE, etc)

* Exact Browser Version (a screenshot of the about page would be awesome!)

* Operating System - as much detail as possible (e.g. Ubuntu Lucid 64bit)

* The speed and type of Internet connection you have (e.g. ADSL, Cable, ADSL2 and 256k, 6M). You can find this information in your ADSL modem.

* Please go to http://www.speedtest.net/ and send me the numbers the widget reports.

* Anything else you think is important.

This is meant to be used by people watching a live video stream. They may be technical, but we can't rely on that, and really we want the process to be as automated as possible. The more work the user has to do, the less they will use it.

When live events are streamed (like a conference) we will hear reports later of problems. Generally that is too late and we won't know what the problem was, so we don't know what to fix so it doesn't happen again. It would be nice if there was a system to capture the details.

Issue details

Add auto-debugging

Issue body

- Does the video work? - Switch to HD off

- Does the video work, now? - Switch to Flash

- Any luck now? - Switch to Justin.tv

- Still nothing? - Switch to Audio only
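
The checklist above is effectively an ordered fallback ladder. Here is a minimal Python sketch of that ordering. The step names are only labels copied from the list, `next_fallback` is a hypothetical helper, and how each switch would actually be performed in the player is not specified in this issue.

```python
# Fallback order taken from the checklist above; the names are only labels.
FALLBACK_STEPS = ["HD off", "Flash", "Justin.tv", "Audio only"]


def next_fallback(fallbacks_tried):
    """Return the next fallback to try, or None once all have been tried.

    fallbacks_tried is how many fallbacks the viewer has already been
    switched to without the video working.
    """
    if fallbacks_tried < 0:
        raise ValueError("fallbacks_tried must be non-negative")
    if fallbacks_tried >= len(FALLBACK_STEPS):
        return None
    return FALLBACK_STEPS[fallbacks_tried]
```

Keeping the order in a single list means a step can be added or reordered without touching the logic that walks it.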